REFUGEE SCREENING: A BRIEF INTRODUCTION (AND A REQUEST FOR EQUIPMENT)

In an increasingly chaotic world, people fleeing life-threatening chaos—refugees—are a growing group. Today over 65 million displaced people—representing the biggest wave of mass displacement since World War II—seek safer, better lives in new countries, cultures, languages, fields, and communities. Many are climate refugees—people displaced by the effects of climate change. This group will probably grow by an order of magnitude in the coming decades, unless countries and corporations achieve global collective action to reduce carbon emissions and otherwise stem the effects of what we are already experiencing as climate departure.

Refugees face many challenges: life-threatening violence and other disasters at home, treacherous routes toward safety, uncertainty in the asylum process, detention in unsafe camps, deprivation of basics like food and housing, patchwork integration resources, and ignorance and prejudice in their new communities that at times turn violent. As right-wing politicians link the fear driving that prejudice with the popular fear of (statistically rare) Islamic terrorism in the West, pressure mounts to introduce refugee screenings that explicitly discriminate against Muslims, people from certain countries, or people whose political sympathies threaten the status quo, all in the name of screening out terrorists.

So a new technology promising to screen out terrorist refugees at a rate better than chance but less than perfection sounds great. But according to the math, such tools tend to hurt security and innocent people alike. Here’s why.

Bayes’ Rule

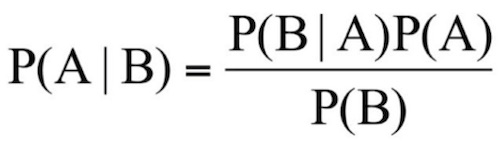

A mathematical theorem called Bayes’ Rule says:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

where A and B are events and P(B) ≠ 0. P(A) is the probability of observing event A without regard to whether B occurs, and vice versa for P(B). P(A | B) is the conditional probability, or the probability of observing event A given that you also observe event B; P(B | A) is defined the same way with the roles reversed. For example, P(A) might be the probability that you have an adverse drug reaction and P(B) the probability that you’re pregnant. We know intuitively that pregnant women have more adverse drug reactions than the general population, so P(A | B) is greater than P(A).

In other words, Bayes’ Rule says weird people are weird: the young punk with purple hair is also more likely than the general population to have a nose ring. Vulnerable people are vulnerable: a girl who is abused is more likely to grow up to have an abusive partner. Sick people get sick: immunocompromised patients (people with autoimmune disorders, cancer, HIV, or others who take immune suppressants) are more likely than others to have serious complications from the flu. In general, the probability of an outcome depends on which group a person belongs to. Subgroups matter.
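To make the formula concrete, here is a minimal Python sketch. It assumes one common way of computing the denominator, expanding P(B) with the law of total probability as P(B | A)P(A) + P(B | not A)P(not A). The function name `bayes_posterior` and the example probabilities (1% prevalence, a test that flags 90% of true cases and 5% of everyone else) are hypothetical, chosen only for illustration.

```python
def bayes_posterior(prior: float, p_b_given_a: float, p_b_given_not_a: float) -> float:
    """Return P(A | B) via Bayes' Rule.

    P(B) is expanded with the law of total probability:
    P(B) = P(B | A) * P(A) + P(B | not A) * P(not A).
    """
    p_b = p_b_given_a * prior + p_b_given_not_a * (1 - prior)
    if p_b == 0:
        raise ValueError("P(B) must be non-zero")
    return p_b_given_a * prior / p_b


# Hypothetical numbers: A = "has the condition", B = "test is positive".
# 1% prevalence, 90% of true cases test positive, 5% of non-cases test positive.
print(f"P(A | B) = {bayes_posterior(0.01, 0.90, 0.05):.3f}")
```

With these made-up numbers the posterior comes out around 0.15: even a test that catches 90% of true cases leaves a positive result far more likely to be wrong than right when the condition is rare.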

More specifically, Bayes’ Rule gives us a logic for thinking about probabilities that takes base rates (rates of occurrence in a larger population) into account by breaking events down into four categories. Any screening, be it for a medical condition, a crime, or something else, produces:

1. false positives (people who are healthy or innocent, but whom the screening flags as ill or guilty),

2. true positives (people who are ill or guilty, and whom the screening flags),

3. false negatives (people who are ill or guilty, but whom the screening clears), and

4. true negatives (people who are healthy or innocent, and whom the screening clears).

It’s generally safe to assume that all four categories contain some people, because existing tools for assessing wellness or investigating crime correctly identify ill or guilty people only at rates above chance but below perfection.

Table 1: Categories of outcomes of a screening test that Bayes’ Rule helps us understand.
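The four categories can be sketched as expected counts. This minimal example assumes a screening is described by two numbers: its sensitivity (the share of ill or guilty people it flags, roughly what this piece calls “accuracy”) and its false positive rate (the share of healthy or innocent people it flags). The names `Outcomes` and `expected_outcomes` and the example population are hypothetical.

```python
from typing import NamedTuple


class Outcomes(NamedTuple):
    """Expected counts in each of the four screening categories."""
    true_positives: float   # ill/guilty and flagged
    false_negatives: float  # ill/guilty but cleared
    false_positives: float  # healthy/innocent but flagged
    true_negatives: float   # healthy/innocent and cleared


def expected_outcomes(population: int, base_rate: float,
                      sensitivity: float, false_positive_rate: float) -> Outcomes:
    """Split a screened population into the four categories (expected values)."""
    guilty = population * base_rate
    innocent = population - guilty
    return Outcomes(
        true_positives=guilty * sensitivity,
        false_negatives=guilty * (1 - sensitivity),
        false_positives=innocent * false_positive_rate,
        true_negatives=innocent * (1 - false_positive_rate),
    )


# Hypothetical screening: 100,000 people, 1 in 1,000 guilty,
# a test that flags 90% of the guilty and 5% of the innocent.
result = expected_outcomes(100_000, 0.001, 0.90, 0.05)
for name, count in result._asdict().items():
    print(f"{name:>16}: {count:,.0f}")
```

With these illustrative inputs the screen catches 90 of 100 guilty people but also flags 4,995 innocent ones, so false positives outnumber true positives by more than fifty to one.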

This means that for very rare illnesses or crimes (problems with low base rates), such as syphilis or espionage/terrorism, screening tests may produce very large numbers of false positives, misidentifying lots of people as possibly ill or guilty. A common syphilis test is one example: as many as one in three people who test “positive” might not actually have the disease, and a disproportionate number of those false positives have an unrelated disease (lupus) instead.
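The effect of a low base rate shows up clearly if you hold a test fixed and shrink the base rate. This sketch computes the positive predictive value (the share of flagged people who are actually ill or guilty) for a hypothetical test that flags 80% of the guilty and 5% of the innocent. These rates, and the function name, are illustrative assumptions, not figures for any real syphilis or security test.

```python
def share_flagged_who_are_guilty(base_rate: float, sensitivity: float,
                                 false_positive_rate: float) -> float:
    """Positive predictive value: fraction of flagged people who are actually guilty/ill."""
    flagged_guilty = base_rate * sensitivity
    flagged_innocent = (1 - base_rate) * false_positive_rate
    return flagged_guilty / (flagged_guilty + flagged_innocent)


# Hypothetical test: flags 80% of the guilty and 5% of the innocent.
for base_rate in (0.1, 0.01, 0.001, 0.0001):
    ppv = share_flagged_who_are_guilty(base_rate, 0.80, 0.05)
    print(f"base rate {base_rate:<7}: {ppv:.1%} of flagged people are guilty")
```

With these assumed rates, the share of flagged people who are guilty falls from 64% at a 1-in-10 base rate to about 0.2% at a 1-in-10,000 base rate, even though the test itself never changes.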

But people tend to ignore false positives and focus only on true positives when they think about the accuracy rates of screenings. This selective attention produces a logical error called the base rate fallacy. As a species, we have poor intuition about rare events—especially when given information about a specific case of possibly detecting them. The same basic problem applies to screenings for extremely rare crimes such as espionage/terrorism…

Polygraph Programs

At the request of Congress, some of America’s top scientists in the National Academy of Sciences applied Bayes’ Rule while reviewing the scientific evidence on a problem similar to refugee screening: screening National Lab scientists for spies using polygraph (or “lie detector”) programs. Both problems involve trying to identify a small subset of very dangerous actors (terrorists in the refugee screening case, spies in the National Labs) who occur at a very low base rate. In both problems, you really don’t want to harm the larger population, be it vulnerable refugees entitled to sanctuary under international law and common decency, or nuclear physicists without whom your National Labs would suck. So this might seem at first to be a classic liberty-versus-security dilemma, where we must prioritize either our principles of fairness, due process, and human rights, or our safety.

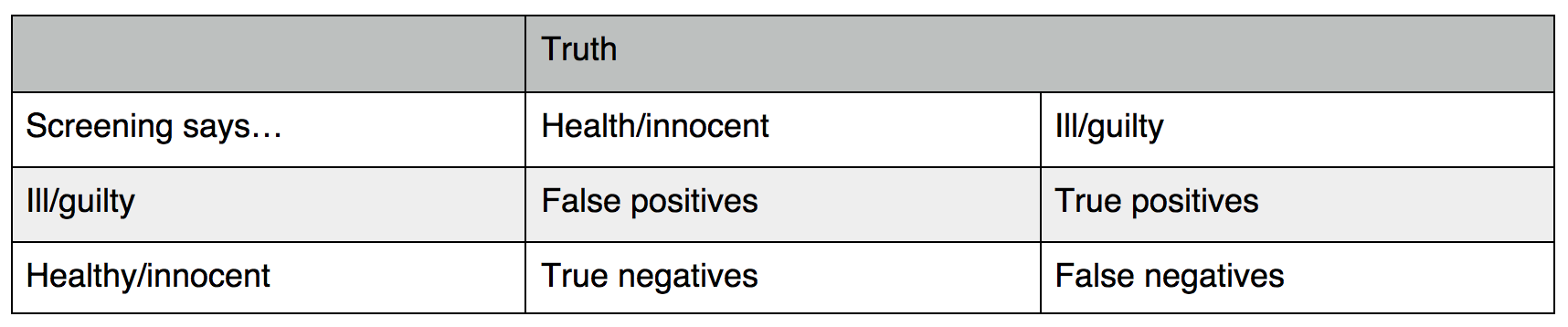

It’s not. The math shows that the liberty-security dichotomy is a false choice, and a perfect example of the base rate fallacy at work. In a hypothetical population of 10,000 National Lab employees including 10 spies (a much higher base rate of espionage than one would expect, assumed in order to be generous to proponents of polygraph screening programs), polygraphing everyone would miss two spies (20%) while unfairly implicating 1,598 innocent people. That’s nearly 16% of innocent people, or about one in six colleagues. Put differently, of the 1,606 people implicated, only 8 are spies, so about 99.5% of implicated people are innocent. And that’s assuming, again being generous, that “lie detectors” have better accuracy (80%) than the evidence suggests.

Table 2: Results of polygraph interpretation assuming better than state-of-the-art accuracy in a hypothetical population of 10,000 people including an unrealistically high number of spies (10). Modified from National Research Council 2003.
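The arithmetic behind Table 2 can be checked in a few lines. This sketch takes the population, the number of spies, the 80% detection rate, and the 1,598 flagged innocents directly from the text and derives the rest. It illustrates the calculation only; it does not reproduce the National Research Council’s method for setting the polygraph threshold.

```python
# Hypothetical polygraph scenario, using the figures given in the text.
population = 10_000
spies = 10
innocents = population - spies            # 9,990 innocent employees
sensitivity = 0.80                        # share of spies flagged
false_positives = 1_598                   # innocents flagged

true_positives = round(spies * sensitivity)      # spies caught
false_negatives = spies - true_positives         # spies missed
false_positive_rate = false_positives / innocents
share_innocent_among_flagged = false_positives / (false_positives + true_positives)

print(f"Spies caught: {true_positives}, spies missed: {false_negatives}")
print(f"Innocents flagged: {false_positives:,} ({false_positive_rate:.1%} of innocents)")
print(f"Share of flagged people who are innocent: {share_innocent_among_flagged:.1%}")
```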

So scientists agree that better than state-of-the-art polygraph screening hurts innocent people and security alike. It does this according to Bayes’ Rule by producing large numbers and proportions of false positives as well as meaningful numbers of false negatives, or guilty people not implicated by the screening. Investigating false positives takes limited resources like time and money away from looking for false negatives. Moreover, security policies that degrade trust—e.g., by causing considerable trouble for large numbers of innocent people—probably degrade security according to procedural justice research, which shows that fairness perceptions matter for how people treat authorities in ways that in turn matter for security (e.g., you don’t talk to cops when they’re known thugs).

Meanwhile, the bad guys (here, spies, who have legitimate reasons to worry about being targeted) are also probably more likely to look into beating espionage screenings. For polygraphs, all it takes is a quick Google search through an anonymizing tool like Tor or a VPN to find the top hit, antipolygraph.org, which explains how polygraphs are interrogation games easily defeated by simple countermeasures such as keeping your mouth shut (saying as little as possible) and squeezing your butt (in response to “control” questions, to which you are expected to lie, in order to manipulate the baseline of comparison for “relevant” questions about espionage). So the screening tool’s accuracy rate is probably lower for the subgroup that matters most, increasing false negatives under field conditions.
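A short sketch of why countermeasures matter: if spies who prepare are flagged less often than the 80% assumed above, the number who pass grows in direct proportion. The lower detection rates here (60% and 40%) are hypothetical values chosen for illustration, since the text gives no field figures for countermeasure users, and the function name is also hypothetical.

```python
def expected_spies_missed(spies: int, sensitivity: float) -> float:
    """Expected number of spies who pass a screening that flags `sensitivity` of them."""
    return spies * (1 - sensitivity)


# 0.80 is the generous figure used above; 0.60 and 0.40 are
# hypothetical field rates for spies who use countermeasures.
for sensitivity in (0.80, 0.60, 0.40):
    missed = expected_spies_missed(10, sensitivity)
    print(f"detection rate {sensitivity:.0%}: about {missed:.0f} of 10 spies pass")
```

In this sketch countermeasures change only the spies’ detection rate; the innocents’ false positive rate, and hence the size of the haystack, stays the same.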

Overall, Bayes’ Rule shows that polygraph screening programs expend limited resources creating, and then trying to sort through, huge haystacks (innocent people who “failed”) containing few needles (actual spies), while some needles (spies) have already fallen through the cracks (“passing” the screening). Thus polygraphs hurt both liberty and security.

Polygraph screenings of National Lab employees are one form of mass surveillance. Mass surveillance, in public health and security contexts alike, is the collection of data from an entire population or a large subset of it in order to identify associations, flag impending emergencies, measure progress toward goals, and see other patterns. Larger-scale forms of mass surveillance, such as bulk data collection and search, are more common and more widely known than lie detection today…

Other Mass Surveillance Programs

In these larger mass surveillance programs, such as PRISM and related bulk collection efforts, the U.S. National Security Agency collects entire countries’ telecommunications (i.e., phone, Internet, and television traffic), including by tapping fiber-optic cables. State hackers with the NSA and partner security services like Germany’s BND then search this bulk data for selector terms ostensibly meant to help gather intelligence to prevent terrorism. These terms have included phone numbers and email addresses of companies and politicians who are political allies but economic competitors, raising questions about economic espionage through mass surveillance.

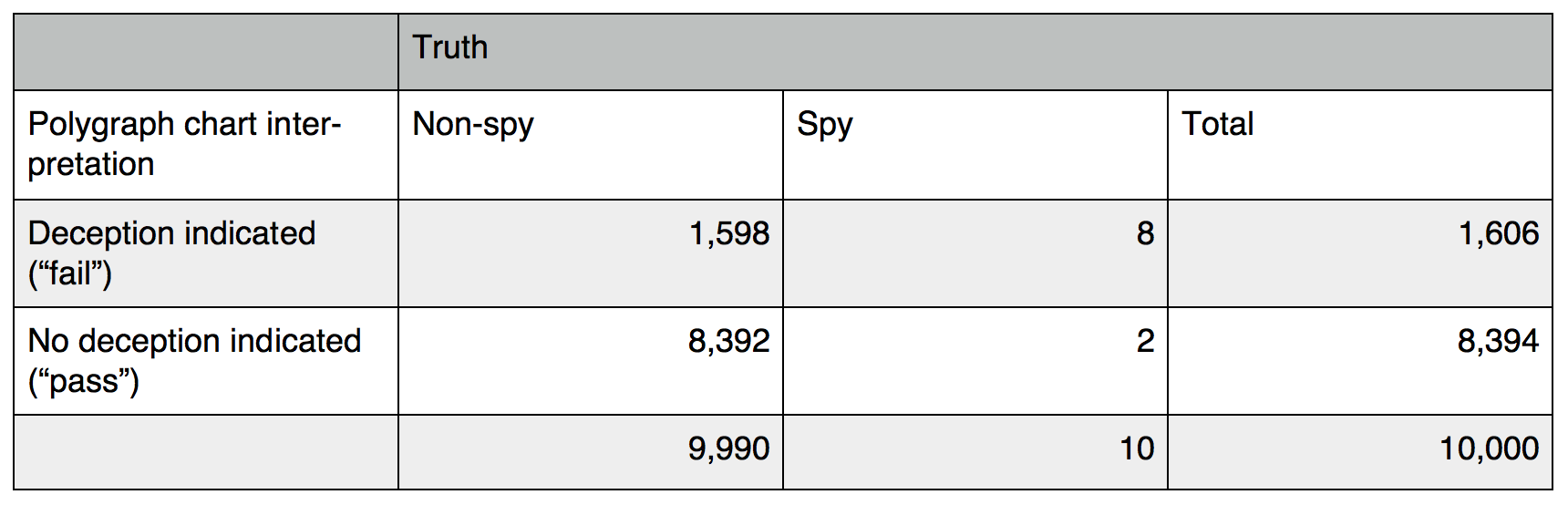

Bayes’ Rule applies to this form of mass surveillance, too. The math shows again that mass surveillance backfires, harming the security it’s intended to advance while also harming innocent people. In other words, data mining for terrorism doesn’t work because it creates a needle-in-a-haystack problem. The haystack of overwhelmingly innocent but implicated people is too big to investigate thoroughly, fairly, and quickly enough to prevent crime and uphold the rule of law. Meanwhile, some needles (terrorists) escape detection in the screening. Sifting through the haystack (overwhelmingly innocent people wrongly implicated by the screening) for the needles (terrorists correctly implicated by the screening) bleeds resources needed to find the missed needles (terrorists not detected by the screening).

Table 3: Results of mass surveillance assuming 80% accuracy in the total U.S. population.

Since the attacks of Sept. 11, 2001, 546 individuals have been arrested for terrorism (true positives), and there have been 28 deadly domestic terrorism attacks (false negatives). We can extrapolate from these numbers assuming that more than one person was involved in each plot, that there is only partial overlap between arrests and executed attacks, and that there are still some terrorists in America who have neither been implicated through mass surveillance nor acted yet. Doing so suggests a hypothetical universe in which, again making generous assumptions to give proponents of mass surveillance the benefit of the doubt, there are 1,000 terrorists in a population of 325 million (a higher base rate than one would expect given the data), and mass surveillance detects them with 80% accuracy and wrongly implicates only 1% (.01) of innocents (a higher accuracy rate and lower false positive rate than one would expect).

Under these better-than-best-possible hypothetical conditions, 99.97% of people implicated under mass surveillance are innocent. In this scenario, further investigation of all 3,250,790 implicated Americans, 3,249,990 of them innocent, would be needed to find the 800 terrorists who can be implicated with this screening. The costs of investigating innocent people include direct investigative costs, opportunity costs (the investigators could be doing other stuff), civil liberties, and the degradation of the fabric of a free society built on trust. Those resources are then unavailable for finding the 200 missed terrorists.
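The scenario’s figures can be recomputed directly. One assumption is worth flagging: the text’s count of 3,249,990 implicated innocents corresponds to flagging 1% (0.01) of the 324,999,000 innocent residents, so that is the false positive rate used here. The 80% detection rate and the population figures come from the text.

```python
# Hypothetical mass surveillance scenario from the text.
population = 325_000_000
terrorists = 1_000
innocents = population - terrorists      # 324,999,000
sensitivity = 0.80                       # share of terrorists flagged
false_positive_rate = 0.01               # share of innocents flagged (1%), inferred from the text's count

true_positives = terrorists * sensitivity            # terrorists flagged
false_negatives = terrorists - true_positives        # terrorists missed
false_positives = innocents * false_positive_rate    # innocents flagged
flagged = true_positives + false_positives
share_innocent = false_positives / flagged

print(f"Flagged: {flagged:,.0f} (innocent: {false_positives:,.0f})")
print(f"Terrorists caught: {true_positives:,.0f}, missed: {false_negatives:,.0f}")
print(f"Share of flagged who are innocent: {share_innocent:.3%}")
```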

For security purposes, flipping a coin would be better. This is true under the 40% accuracy rate that mass surveillance more likely actually has in identifying terrorists who make an effort to hide. (After all, sneaky people are sneaky.) It would also be true if mass surveillance had a better-than-best-possible accuracy rate of .90 and a misidentification rate of only .00001.

So mass surveillance backfires, harming the security it is intended to protect in addition to harming innocent people. This is a mathematical argument—not a political one. But some of America’s best statisticians work for agencies like the NSA that are engaged in (what is for them lucrative) mass surveillance. Combined with the historical development of these programs and their alternatives, this raises questions about the intent of these programs, and subsequently about the ability of the U.S. government to address its own waste, fraud, and abuse. Perhaps rolling back mass surveillance in a police state is a losing game, and we have lost it.

In any event, just as Bayes’ Rule applies to screening National Lab employees for spies and ordinary telecommunications consumers for terrorists using mass surveillance programs such as polygraphs and PRISM, it also applies to screening people at transportation hubs or border checkpoints. People like refugees…

Next-Generation Polygraphs

Scientists agree there’s no such thing as a “lie detector.” There is no unique physiological, verbal, or behavioral lie response to detect. Nor is there a unique response or pattern of responses associated with bad intent, such as a criminal respiration rate or terrorist heart rhythm. Our friends and foes have the same eyes, hands, organs, and dimensions; they laugh the same when tickled and bleed the same when pricked, as the best psychologist in history, Shakespeare, long ago noted in The Merchant of Venice. Nonetheless, with $3 to $4 billion a year flowing, mostly from U.S. federal government coffers, into the lie detection industry, researchers have continued their age-old quest for a psychic X-ray that can see intent, as if seeing directly into people’s heads and hearts.

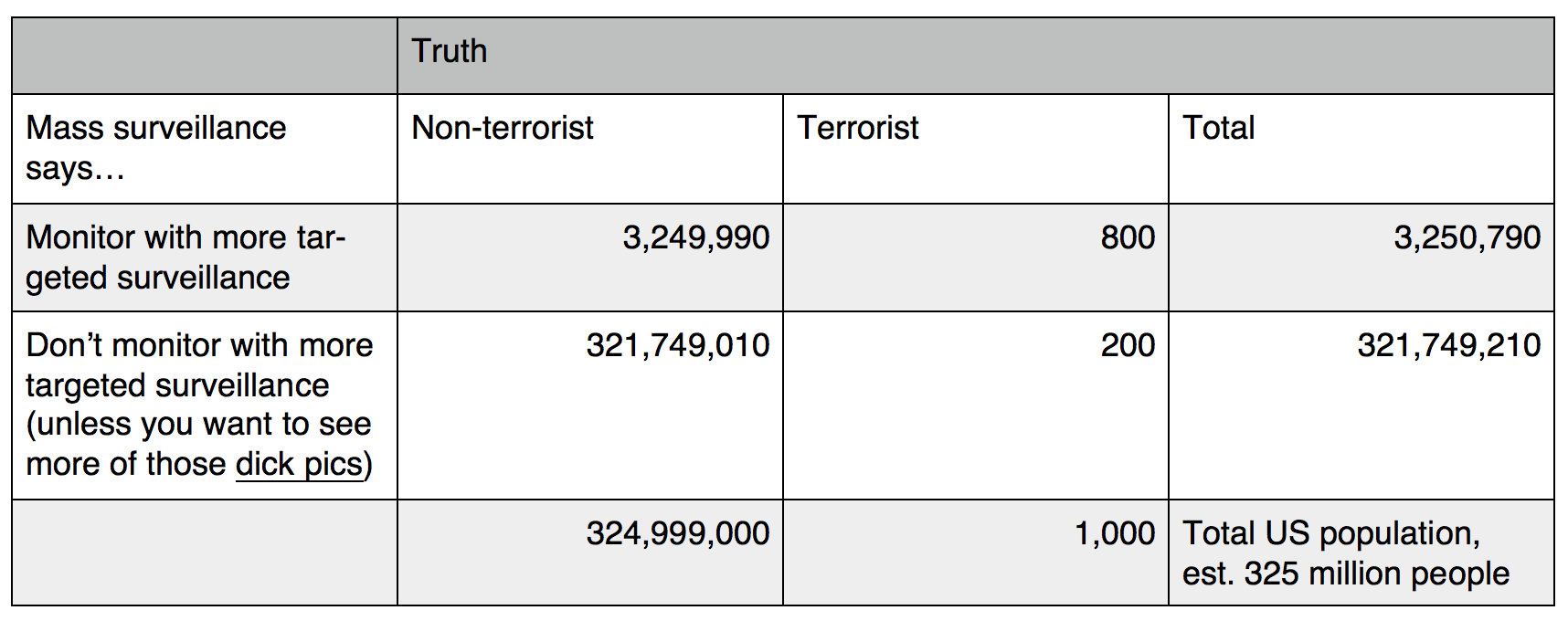

This quest has produced a few next-generation polygraph programs, including the TSA’s FAST (Future Attribute Screening Technology) and AVATAR (Automated Virtual Agent for Truth Assessments in Real-time), developed by the U.S. National Center for Border Security and Immigration (BORDERS). These wireless, next-generation polygraphs use physiological, verbal, and behavioral cues to identify liars at rates higher than chance but below perfection, just like polygraphs. The programs appear to have achieved stated accuracy rates comparable to those of polygraph programs, around 70% under lab conditions. The refugee crisis is likely to worsen as climate change and related chaos displace tens to hundreds of millions more people in the coming decades. Without preventive policy action, this means large numbers of particularly vulnerable people are probably going to be subjected to next-generation polygraph screenings that hurt both innocent people and the very security those screenings are ostensibly intended to advance.

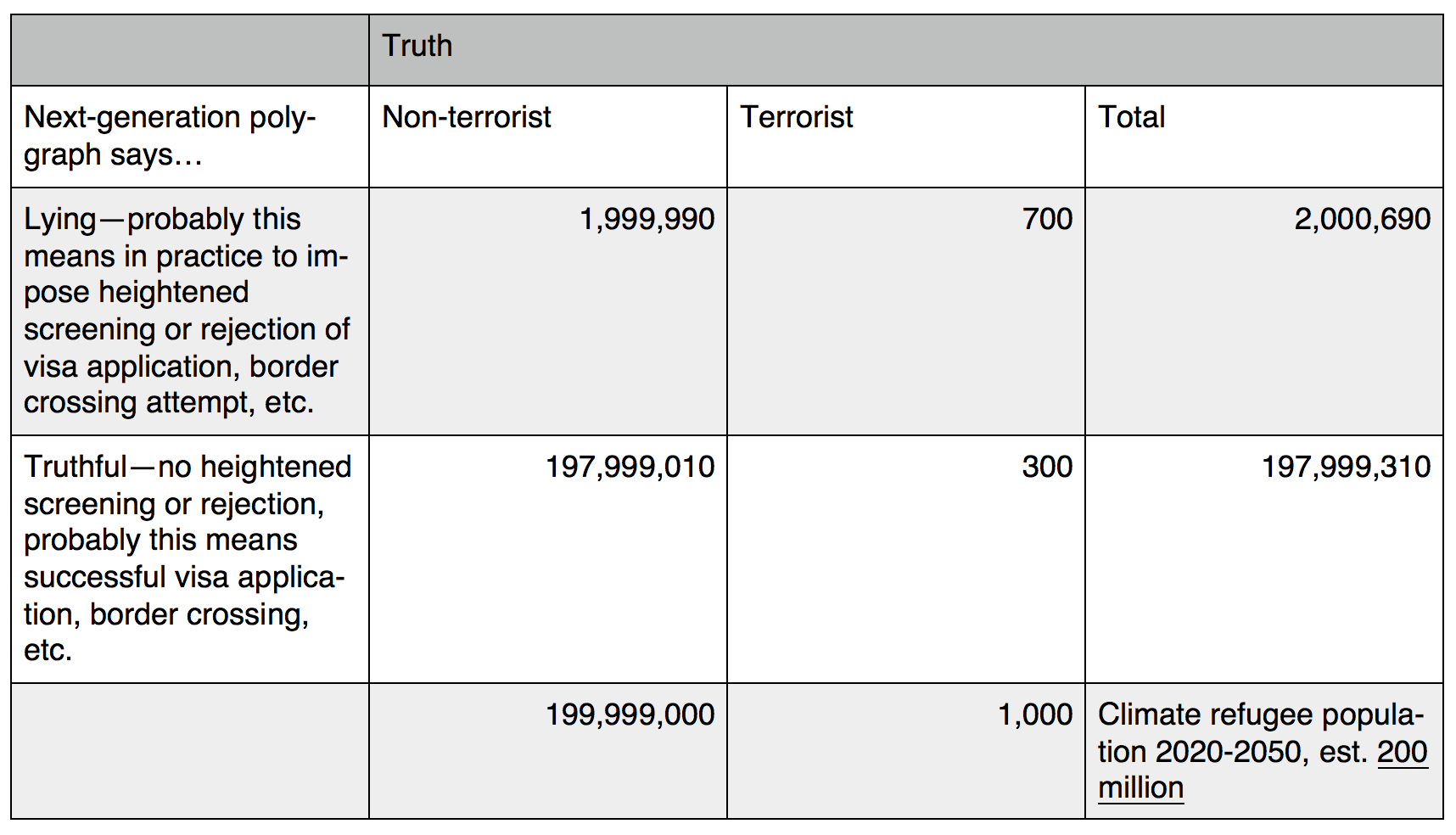

Table 4: Results of next-generation polygraph screening assuming 70% accuracy in a future hypothetical climate refugee population of 200 million refugees with an unreasonably high hypothetical base rate of terrorists (1,000). The screening unfairly implicates nearly two million innocent people.

This table shows how next-generation polygraph screenings affect a hypothetical population of 200 million future climate refugees including 1,000 terrorists. This is an even higher base rate of terrorism than in the mass surveillance example, and far higher than one would expect given the evidence. It also assumes an accuracy rate of 70%, which is again better than one would expect based on the available scientific evidence. And it again assumes that the screening wrongly implicates only 1% (.01) of innocents, a lower false positive rate than one would expect. These assumptions are extremely generous to proponents of next-generation polygraph screening programs.
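The same calculation can be applied to this refugee scenario, written here as a small reusable function whose name is hypothetical. As in the mass surveillance example, the text’s figures of nearly two million innocents implicated and over 99.96% of implicated people innocent correspond to flagging 1% (0.01) of innocents, so that rate is assumed here alongside the text’s 200 million refugees, 1,000 terrorists, and 70% detection rate.

```python
def flagged_breakdown(population: int, targets: int,
                      sensitivity: float, false_positive_rate: float) -> dict:
    """Expected counts for a screening, given its hit rate and false positive rate."""
    innocents = population - targets
    caught = targets * sensitivity
    wrongly_flagged = innocents * false_positive_rate
    return {
        "caught": caught,
        "missed": targets - caught,
        "wrongly_flagged": wrongly_flagged,
        "share_innocent": wrongly_flagged / (wrongly_flagged + caught),
    }


# Hypothetical refugee scenario: 200 million people, 1,000 terrorists,
# 70% of terrorists flagged, 1% of innocents flagged (assumed rate).
refugees = flagged_breakdown(200_000_000, 1_000, 0.70, 0.01)
print(f"Innocent people flagged: {refugees['wrongly_flagged']:,.0f}")
print(f"Terrorists caught: {refugees['caught']:,.0f}, missed: {refugees['missed']:,.0f}")
print(f"Share of flagged who are innocent: {refugees['share_innocent']:.3%}")
```

Under these assumptions the screen wrongly flags 1,999,990 people to catch 700 terrorists, while 300 terrorists pass.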

Under this better-than-best-possible refugee screening scenario, of the roughly two million people implicated as possibly being terrorists, over 99.96% are innocent. This is a problem because rejecting their visa applications or border crossing attempts may result in their deaths. Flipping a coin would again be a better screening for security purposes alone.

But flipping a coin to decide which desperate people fleeing life-threatening chaos can seek refuge on safer shores would be grotesquely immoral in a way one does not need Bayes’ Rule to understand. Such immoral acts might backfire and contribute to the very radicalization they are intended to check.

So too might screenings with lots of false positives backfire for similar reasons. In the polygraph screening context, falsely accusing about 16% (roughly one in six) of your employees of being fishy might be perceived as unfair, demoralizing your workforce and sowing mistrust, and thus hurting security, because people tend to share information and otherwise cooperate less with authorities who appear unfair. In the mass surveillance context, investigating millions of innocent Americans, after surveilling hundreds of millions, might lead people to dampen the political expression on which free societies rely, as research shows it has. And in the refugee screening context, wrongly rejecting millions of visa applications or border crossing requests by desperate people fleeing chaos the West has helped create might actually fuel the very terrorism it is meant to dampen, in part by decreasing trust in and information-sharing with authorities, and in part by victimizing innocent people who are already desperate. When we degrade our moral authority by violating the rule of law, we harm our integrity and our security alike.

Next Steps

Experienced and independent scientists, hackers, and activists like myself need equipment access to prevent human rights abuses on the horizon. Future research should hack real polygraph and next-generation polygraph technologies used in federal security screening programs, including those recently field-tested in the U.S. (FAST) and in the U.S. and Europe (AVATAR). The first-level hack should establish how innocent individuals can use countermeasures to avoid becoming false positives. A next-level hack might bring the resulting evidence on the vulnerability of these screenings, which underscores the threat of false negatives, to country-level or larger legislative bodies. Subsequent advocacy might seek recognition from a body like the European Court of Human Rights (ECHR) of the human right to safety in this context. That means safety for individuals from worse-than-random implication as spies or terrorists, safety for societies from security programs that demonstrably backfire on the population as a whole, and safety for refugees in relatively stable countries while the world changes faster than we have seen before in human history.

– – –

Paintings by the author: (top) “Southern Reflection”, (middle) “Needle in the Haystack”. To read more about supporting this research, visit Vera’s website at https://verawil.de/2016/11/the-naked-polygraph-project.